Fixing Multi-App Publishing Pipelines (Final Cut → Compressor → Cloud Upload) With a File Event Queue Buffer

In today’s fast-paced video production environments, seamless and automated publishing pipelines are crucial for efficiency and consistency. Many creators rely on Apple’s Final Cut Pro for editing, Compressor for encoding, and various cloud services for distribution. However, when each tool operates independently in a multi-app workflow, complex inter-app timing and file handling issues can arise. Unmanaged file handoffs can lead to missed uploads, corrupted renders, or workflow bottlenecks.

TL;DR: This article explores a common challenge in multi-tool post-production workflows: ensuring timely and orderly communication between Final Cut Pro, Compressor, and cloud upload tasks. By inserting a file event queue buffer—essentially a process that monitors file changes and handles them in order—you can prevent workflow failures triggered by overlaps, delays, or retries. This approach improves reliability, automates retry logic, and brings a layer of observability that traditional workflows often lack. Whether you’re an indie filmmaker or in a high-volume studio, this fix can dramatically streamline your publishing.

Understanding the Problem

A typical video publishing pipeline might look like this:

- You finish editing in Final Cut Pro and export the project as a Master File.

- The Master File is sent to Compressor for encoding into different resolutions and formats.

- The encoded files are uploaded manually or automatically to a cloud platform like Vimeo, YouTube, or a content delivery network (CDN).

While conceptually simple, each of these stages involves file system transitions and application calls that may not speak the same language or operate at the same speed:

- Final Cut exports large files, which may be written slowly over time.

- Compressor might try encoding a file before it’s finished writing.

- Cloud uploads might initiate before encoding is complete, resulting in broken videos online.

Since these operations often rely on file system events (e.g., a new file appearing in a folder), it’s easy for a system to mistake an in-process file for a finished one. This is where a file event queue buffer comes in: it acts as a guardrail between each stage of the flow.

What is a File Event Queue Buffer?

A file event queue buffer is an intermediary layer—usually a small program or script—that watches for file events like creations or modifications in a target folder. Rather than immediately triggering the next tool (like Compressor), it queues the event and waits until the file is fully written and stable. Only then does it forward the file down the pipeline. Think of it like a digital airlock for your media files.

Here’s a simplified architecture:

- File Watcher: Monitors for changes in the “Exports” directory.

- Stabilization Timer: Introduces a configurable wait window to ensure the file isn’t still being written.

- Queue Buffer: Holds file events until they’re verified as stable.

- Trigger Script: Hands off the clean file to Compressor or the next process.
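The four components above can be sketched in a few dozen lines of Python. This is a minimal, hypothetical sketch: `FileEventBuffer`, `submit()`, `close()`, and the `is_stable`/`trigger` callables are illustrative names, not part of any library. The watcher calls `submit()` for each event, and a single worker thread holds each file until the stability check passes before handing it to the trigger.

```python
import logging
import queue
import threading
from typing import Callable


class FileEventBuffer:
    """Hypothetical queue buffer between a file watcher and the next tool.

    The watcher calls submit() for every event it sees; one worker thread
    takes events in arrival order, waits for the file to stabilize, and only
    then calls the trigger (e.g. a function that starts a Compressor job).
    """

    def __init__(self, is_stable: Callable[[str], bool],
                 trigger: Callable[[str], None]) -> None:
        self._events = queue.Queue()   # holds paths, or None as a stop signal
        self._pending = set()          # paths queued but not yet processed
        self._lock = threading.Lock()
        self._is_stable = is_stable    # stabilization check (blocks until settled)
        self._trigger = trigger        # hand-off to the next process
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, path: str) -> None:
        # Watchers often report one file many times (created, modified, ...);
        # ignore repeats while the file is already waiting in the queue.
        with self._lock:
            if path in self._pending:
                return
            self._pending.add(path)
        self._events.put(path)

    def close(self) -> None:
        """Stop accepting work and wait for already-queued files to finish."""
        self._events.put(None)         # sentinel: tells the worker to exit
        self._worker.join()

    def _run(self) -> None:
        while True:
            path = self._events.get()
            if path is None:
                break
            try:
                if self._is_stable(path):
                    self._trigger(path)
                else:
                    logging.warning("never stabilized, skipping: %s", path)
            except Exception:
                # Keep the worker alive so one bad file cannot stall the queue.
                logging.exception("hand-off failed: %s", path)
            finally:
                with self._lock:
                    self._pending.discard(path)
```

Because a single worker drains the queue, files are handed off strictly in arrival order. A second stage (encoded files to upload) could reuse the same class with a different trigger.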

Why Simple “Watch Folder” Logic Doesn’t Cut It

Many video professionals assume that simple watch-folder logic (having Compressor or a script poll a folder for new files) is sufficient. In practice, this often leads to:

- Race conditions: Two apps trying to read or write to a file simultaneously.

- Incomplete uploads: Partially written files being sent to cloud services without checks.

- Encoding errors: Compressor failing silently because it was invoked prematurely.

By the time you realize a file didn’t publish properly, hours may be lost re-exporting, re-encoding, and re-uploading. Often, you won’t even know where the error occurred because no single application had full visibility of the pipeline end-to-end.

How the File Event Queue Buffer Fixes the Pipeline

The queue buffer resolves these challenges through four key actions:

- Validation: Verifies that a newly exported file is both complete and not being written to anymore (e.g., no size changes for X seconds).

- Serialization: Ensures only one file moves through the pipeline at a time, avoiding workload collisions.

- Retry Logic: If encoding or upload fails, the buffer can retry after a timeout or flag the failure for attention.

- Logging: Keeps a record of every step, timestamped, and status-coded.

This approach effectively turns your erratic multi-app process into a sequentially managed pipeline, with built-in checkpointing.
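The four actions above, plus checkpointing, can be sketched around a single append-only JSON Lines log that serves both as the timestamped record of every step and as the checkpoint consulted on restart. This is a minimal sketch under those assumptions: the helper names, the `ok`/`retry`/`failed` status codes, and the doubling backoff starting at 30 seconds are illustrative choices, not a standard. Each pipeline step is assumed to be a Python callable that raises an exception on failure.

```python
import json
import time
from pathlib import Path
from typing import Callable


def log_event(log_path: Path, file: str, step: str, status: str, detail: str = "") -> None:
    """Append one timestamped, status-coded JSON line per step attempt."""
    entry = {"ts": time.strftime("%Y-%m-%dT%H:%M:%S"), "file": file,
             "step": step, "status": status, "detail": detail}
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry) + "\n")


def completed_steps(log_path: Path, file: str) -> set:
    """Replay the log (the checkpoint) to see which steps already succeeded."""
    done = set()
    if log_path.exists():
        for line in log_path.read_text(encoding="utf-8").splitlines():
            entry = json.loads(line)
            if entry["file"] == file and entry["status"] == "ok":
                done.add(entry["step"])
    return done


def run_step(log_path: Path, file: str, step: str, action: Callable[[str], None],
             attempts: int = 3, base_delay: float = 30.0) -> bool:
    """Run one step with exponential-backoff retries; True on success."""
    for attempt in range(1, attempts + 1):
        try:
            action(file)
            log_event(log_path, file, step, "ok", f"attempt {attempt}")
            return True
        except Exception as exc:  # every failure is treated as retryable here
            status = "retry" if attempt < attempts else "failed"
            log_event(log_path, file, step, status, str(exc))
            if attempt < attempts:
                time.sleep(base_delay * 2 ** (attempt - 1))
    return False


def process_serially(log_path: Path, files, steps, **retry_opts) -> dict:
    """Move files through the steps one at a time, skipping steps the
    checkpoint says already succeeded; a failed step stops only that file."""
    results = {}
    for file in files:
        done = completed_steps(log_path, file)
        # all() short-circuits, so later steps never run after a failure.
        results[file] = all(
            step in done or run_step(log_path, file, step, action, **retry_opts)
            for step, action in steps
        )
    return results
```

If the machine restarts after encoding succeeded, rerunning `process_serially()` skips straight to the upload step instead of re-encoding, and the log file shows exactly which step failed and why.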

How To Implement Your Own File Queue Buffer

You don’t need to invest in enterprise systems to build this. Here’s a possible DIY setup:

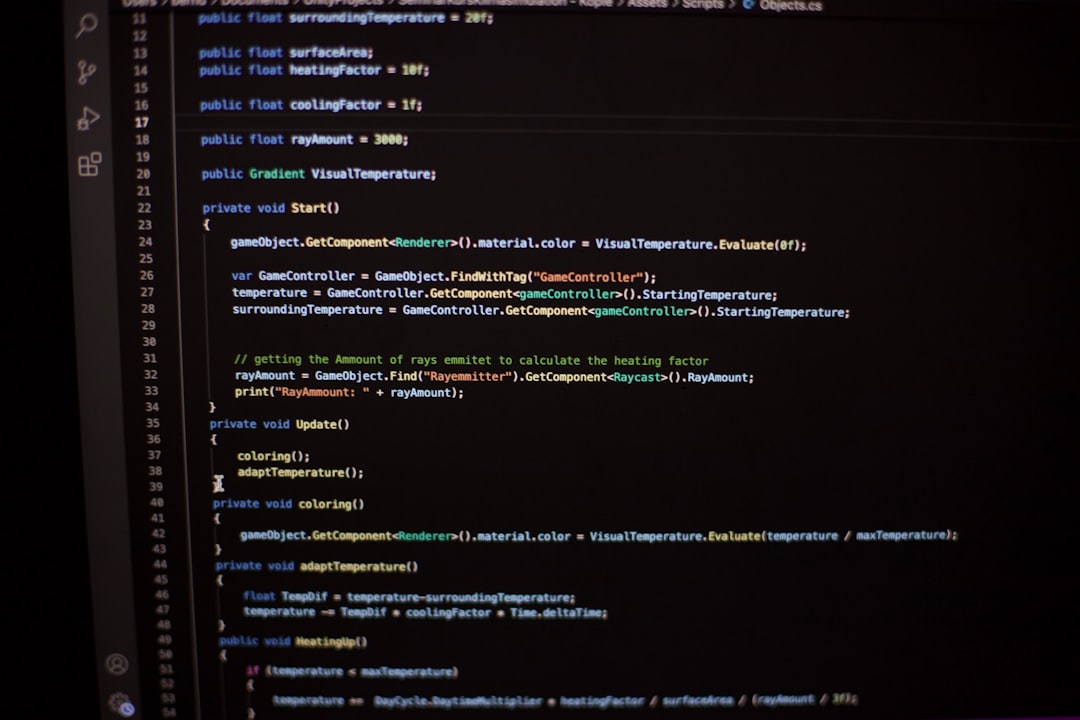

1. Use a Language Like Python, Node.js or Swift

Each of these has libraries that can monitor the file system:

- watchdog for Python

- fs.watch() in Node.js

- File System Events APIs in macOS for Swift

Set up your script to watch the Final Cut “Exports” folder and initiate a stabilization timer when a new file is detected.
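To keep the example dependency-free, the sketch below swaps the event-driven watchdog library for a plain polling loop over the folder listing; it detects the same "new file appeared" condition, just less immediately. The extension list, `poll_new_files()`, and `watch()` are assumptions for illustration, not part of any Final Cut or watchdog API.

```python
import os
import time
from pathlib import Path
from typing import Callable

# Assumed export extensions; adjust to match your Final Cut Pro share settings.
VIDEO_EXTENSIONS = {".mov", ".mp4", ".m4v"}


def poll_new_files(folder: Path, seen: set) -> list:
    """Return paths of video files in `folder` not reported before, oldest first.

    A stdlib polling stand-in for an event-driven watcher such as watchdog:
    each call compares the current folder listing against `seen`.
    """
    found = []
    with os.scandir(folder) as entries:
        for entry in entries:
            if (entry.is_file()
                    and not entry.name.startswith(".")  # skip hidden files
                    and Path(entry.name).suffix.lower() in VIDEO_EXTENSIONS
                    and entry.path not in seen):
                found.append((entry.stat().st_mtime, entry.path))
                seen.add(entry.path)
    return [path for _, path in sorted(found)]


def watch(folder: Path, on_new: Callable[[str], None], interval: float = 5.0) -> None:
    """Poll forever, handing each newly seen export to `on_new`
    (for example, the function that starts the stabilization timer)."""
    seen = set()
    while True:
        for path in poll_new_files(folder, seen):
            on_new(path)
        time.sleep(interval)
```

Note that the first poll reports every matching file already in the folder; pre-fill `seen` with the current listing if only exports made after startup should be processed.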

2. Check for File Stability

You can write logic to check file size repeatedly over time to ensure it isn’t growing before marking it “ready.”

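```python
import os
import time


def is_file_stable(filepath, wait=10, interval=1.0, timeout=3600):
    """Return True once the file size has stayed the same for `wait`
    consecutive checks taken `interval` seconds apart.

    Returns False if the file disappears or is still changing after
    `timeout` seconds, so a stalled export cannot block the queue forever.
    """
    last_size = -1
    stable_count = 0
    deadline = time.monotonic() + timeout

    while stable_count < wait:
        if time.monotonic() > deadline:
            return False              # never settled: flag for attention
        try:
            current_size = os.path.getsize(filepath)
        except FileNotFoundError:
            return False              # file was moved or deleted mid-export

        if current_size == last_size:
            stable_count += 1         # unchanged since the previous check
        else:
            stable_count = 0          # still growing: restart the count
            last_size = current_size

        time.sleep(interval)

    return True
```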

3. Trigger Compressor via Command Line

You can automate Compressor with AppleScript or Automator, or from the command line with utilities such as batchmonitor or Compressor’s own command-line interface. Apple’s Compressor documentation covers the available scripting and command-line options.
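As an illustration, the command-line route might look like this from Python. It assumes Compressor is installed at its default location and uses the `-batchname`, `-jobpath`, `-settingpath`, and `-locationpath` options described in Apple’s Compressor command-line documentation; verify them against the documentation for your Compressor version. The helper names and the preset path are hypothetical. Depending on the version, the command may return once the batch is submitted rather than when encoding finishes, so checking the output folder for a stable file is still the safer signal for the next stage.

```python
import subprocess
from pathlib import Path

# Assumed default location of the executable inside the Compressor app bundle.
COMPRESSOR = "/Applications/Compressor.app/Contents/MacOS/Compressor"


def build_compressor_command(source: Path, setting: Path, output: Path,
                             batch_name: str = "auto-publish") -> list:
    """Assemble a Compressor command line (hypothetical helper)."""
    return [
        COMPRESSOR,
        "-batchname", batch_name,
        "-jobpath", str(source),        # the stabilized master file
        "-settingpath", str(setting),   # a saved Compressor setting (preset) file
        "-locationpath", str(output),   # where the encoded file should land
    ]


def submit_to_compressor(source: Path, setting: Path, output: Path) -> None:
    # check=True raises CalledProcessError on a non-zero exit code, which the
    # buffer's retry logic can catch and log.
    subprocess.run(build_compressor_command(source, setting, output), check=True)
```

Building the argument list separately from running it keeps the command easy to log and test, and passing a list (rather than a shell string) avoids quoting problems with spaces in project names.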

4. Upload Automatically After Encoding

Output from Compressor can be automatically moved into a second trigger folder. The script can use cloud APIs (e.g., Vimeo, AWS S3, or Cloudflare) to upload verified files post-encoding.
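For the hand-off itself, one common pattern is to move each finished encode into the upload trigger folder with a rename, which is atomic when both folders are on the same volume, so the upload stage never picks up a half-copied file. The sketch below assumes that same-volume layout and that a stability check has already passed on Compressor’s output; `hand_off`, `upload_pending`, and the `uploaded` subfolder are hypothetical, and `upload` stands in for whichever cloud client you use, since no specific Vimeo, S3, or Cloudflare API is assumed here.

```python
import os
from pathlib import Path
from typing import Callable


def hand_off(encoded: Path, upload_dir: Path) -> Path:
    """Move a finished encode into the upload trigger folder.

    os.replace is an atomic rename when source and target share a volume
    (it raises OSError across volumes), so watchers never see partial files.
    """
    upload_dir.mkdir(parents=True, exist_ok=True)
    target = upload_dir / encoded.name
    os.replace(encoded, target)
    return target


def upload_pending(upload_dir: Path, upload: Callable[[Path], None]) -> list:
    """Upload every file in the trigger folder, oldest first, and move each
    success into an 'uploaded' subfolder so it is never sent twice.

    If upload() raises, the exception propagates and the file stays in
    place, so retry logic around this call can pick it up again.
    """
    done_dir = upload_dir / "uploaded"
    done_dir.mkdir(exist_ok=True)
    files = sorted((p for p in upload_dir.iterdir() if p.is_file()),
                   key=lambda p: p.stat().st_mtime)
    uploaded = []
    for path in files:
        upload(path)                          # your cloud client call goes here
        os.replace(path, done_dir / path.name)
        uploaded.append(path.name)
    return uploaded
```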

Bonus: Add Email or Chat Notifications

Integrate push notifications through Slack webhooks or email alerts so you’re aware when a new file finishes its entire lifecycle: export, encode, and upload. Troubleshooting becomes easier with this observability layer.
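Slack incoming webhooks accept an HTTP POST with a JSON body whose `text` field becomes the message. A minimal standard-library sketch follows; the webhook URL is a placeholder you would generate in your Slack workspace, and `build_slack_message` and `notify_slack` are hypothetical helper names.

```python
import json
import urllib.request


def build_slack_message(filename: str, stages: dict) -> dict:
    """Summarize a file's lifecycle (stage name -> succeeded?) as a
    Slack incoming-webhook payload."""
    marks = " | ".join(f"{stage}: {'ok' if ok else 'FAILED'}" for stage, ok in stages.items())
    status = "published" if all(stages.values()) else "needs attention"
    return {"text": f"{filename} {status} ({marks})"}


def notify_slack(webhook_url: str, payload: dict, timeout: float = 10.0) -> int:
    """POST the JSON payload to the webhook and return the HTTP status code."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Sending one message per file at the end of its lifecycle, rather than one per step, keeps the channel readable while still surfacing which stage failed.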

Real-World Impact: Case Study

At a boutique design studio in LA, the creative team used to miss deadlines because uploads would silently fail overnight. By introducing a Python-based file queue buffer between Final Cut Pro exports and automated uploads, they saw a 0% failure rate over a 6-week test period. All videos uploaded correctly and on time, with built-in retry logic recovering from CDN hiccups and transcoding delays. More importantly, the team could go home confident that the system would handle the night shift without human intervention.

Conclusion

Multi-app pipelines in modern video production can be a blessing and a curse. While each tool—Final Cut, Compressor, cloud APIs—excels at its task, disconnects between them can jeopardize the whole workflow. A file event queue buffer acts as the translator, traffic cop, and watchdog all in one. Implementing one may involve a bit of scripting or automation work, but the payoff in reliability, stability, and scalability is well worth the investment.

Whether you’re shipping five videos a week or five hundred, it’s time to stop relying on guesswork and folders alone to glue your tools together. Let the buffer take the load—so your creativity stays center stage.
